Designing for Autonomy: UX Principles for Agentic AI Systems

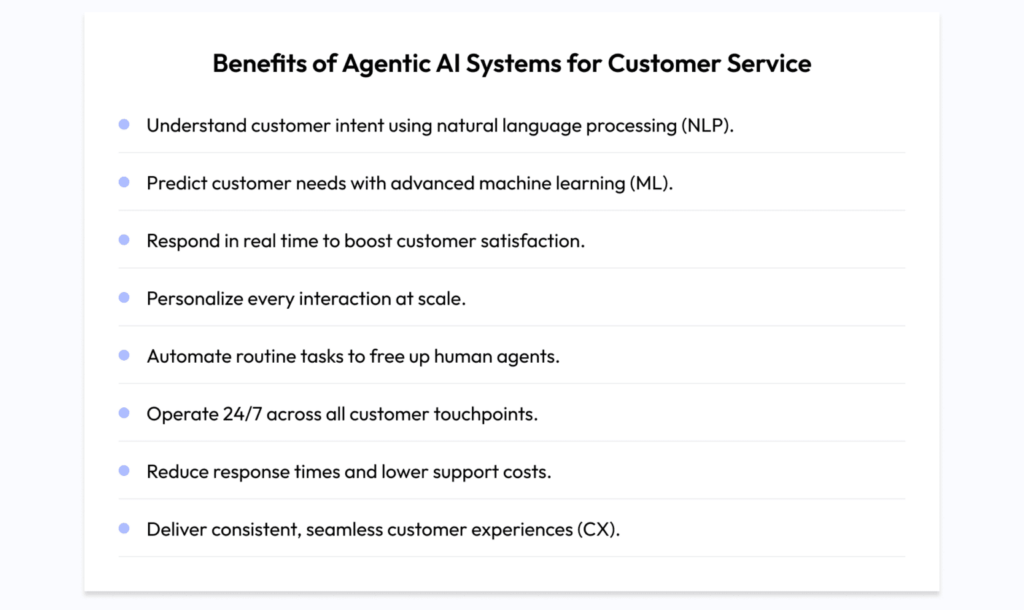

As artificial intelligence evolves from reactive chatbots to autonomous agents, design teams are facing a new frontier: creating user experiences for systems that not only respond but act on their own.

Autonomous agents—capable of goal-seeking, multi-step reasoning, and contextual adaptation—represent a dramatic leap from co-pilots and traditional assistants. They can pursue tasks with minimal supervision, call tools independently, and even collaborate with other agents in a shared runtime.

For UX practitioners, this evolution introduces a complex design challenge: How do we craft interfaces that support trust, transparency, and control in systems we don’t fully script?

From Interfacing With AI to Interfacing With Intelligence

In the Invisible Machines podcast, Robb Wilson—founder of OneReach.ai and author of The Age of Invisible Machines—summarizes this shift bluntly:

“We’re no longer designing for the machine—we’re designing for what the machine does when we’re not looking.”

This reality reshapes UX at a foundational level. Previously, designers created flows assuming the system would only act when prompted. But in agentic systems, autonomy is not a feature—it’s the default behavior.

These agents don’t live inside neat input/output boxes. They initiate tasks, communicate asynchronously, and may alter the state of applications or data without user awareness unless careful affordances are designed into the experience.

Key UX Challenges for Autonomous Agents

Designing for agentic AI surfaces four high-stakes areas for UX and product teams:

1. Trust and Transparency

Users must understand what agents are doing, why they’re doing it, and when they’ll act. Without these cues, autonomy feels like unpredictability.

- Provide real-time status indicators (“Your travel assistant is rescheduling your flight”).

- Include rationales or intent summaries (“Rebooking due to a forecasted weather disruption”).

- Offer a viewable history and audit logs for actions the agent has taken autonomously.
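One way to picture these three cues working together is as a single record that feeds the status indicator, the rationale, and the audit log at once. The sketch below is a minimal illustration only; the names `AgentAction`, `AuditLog`, `record`, `current_status`, and `history` are hypothetical and are not taken from any product or from Wilson’s book.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentAction:
    """One autonomous action, stored with the cues a user needs to trust it."""
    status: str      # what the agent is doing (drives the live indicator)
    rationale: str   # why it is doing it (the intent summary)
    timestamp: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class AuditLog:
    """Append-only history of actions the agent took on its own (hypothetical)."""

    def __init__(self) -> None:
        self._entries = []

    def record(self, status: str, rationale: str) -> AgentAction:
        entry = AgentAction(status=status, rationale=rationale)
        self._entries.append(entry)
        return entry

    def current_status(self) -> str:
        # The most recent entry is what a real-time status indicator would show.
        return self._entries[-1].status if self._entries else "Idle"

    def history(self) -> list:
        # Oldest first, so the log reads as a timeline the user can review.
        return [
            f"{e.timestamp:%Y-%m-%d %H:%M} UTC | {e.status} | Why: {e.rationale}"
            for e in self._entries
        ]


log = AuditLog()
log.record("Rescheduling your flight", "Weather disruption forecasted")
print(log.current_status())
for line in log.history():
    print(line)
```

Keeping status and rationale in the same record means the interface can never show an action without also being able to explain it.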

“The more autonomous a system becomes, the more visible its reasoning must be,” writes Wilson in The Age of Invisible Machines.

2. State and Context Management

Agents act across time, apps, and modalities. That means users need visibility into what state the agent is in—even if the interaction is paused or asynchronous.

- Use persistent agent dashboards that summarize current objectives, pending actions, and next steps.

- Leverage natural language recaps when users re-enter a thread (“While you were away, I confirmed your hotel and drafted your expense report”).
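As a rough sketch of how a dashboard summary and a re-entry recap could draw on the same underlying state, consider the following. The `AgentState` class and its field names are assumptions made for illustration; a real agent runtime would track far more than this.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """Hypothetical snapshot of what an agent is working on."""
    objective: str
    completed: list = field(default_factory=list)  # done since the user last looked
    pending: list = field(default_factory=list)    # queued actions, in order

    def dashboard(self) -> dict:
        # Data for a persistent dashboard: objective, pending actions, next step.
        return {
            "objective": self.objective,
            "pending_actions": list(self.pending),
            "next_step": self.pending[0] if self.pending else None,
        }

    def recap(self) -> str:
        # Natural language summary shown when the user re-enters the thread.
        if not self.completed:
            return "While you were away, nothing changed."
        if len(self.completed) == 1:
            done = self.completed[0]
        else:
            done = ", ".join(self.completed[:-1]) + " and " + self.completed[-1]
        self.completed.clear()  # the user has now seen these items
        return f"While you were away, I {done}."


state = AgentState(
    objective="Plan the Chicago trip",
    completed=["confirmed your hotel", "drafted your expense report"],
    pending=["book a rental car"],
)
print(state.recap())
print(state.dashboard())
```

Clearing the completed list after a recap is one possible design choice: it keeps the next recap limited to what is new, while the dashboard continues to show the standing objective and next steps.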

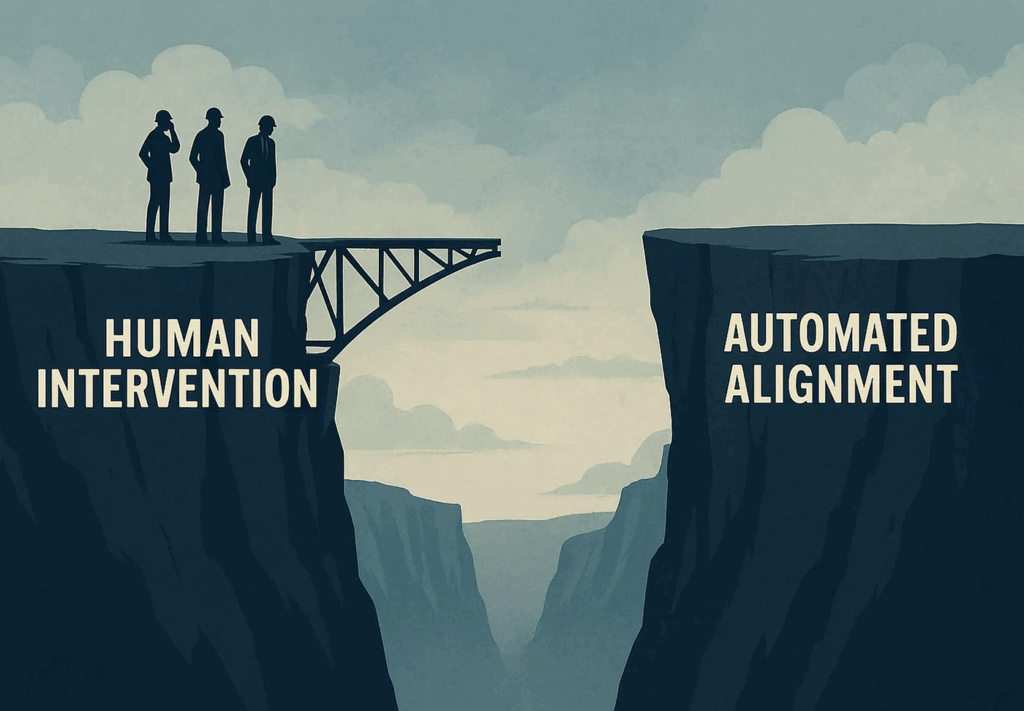

3. Interruptibility and Control

Autonomy doesn’t mean loss of control. Users should be able to:

- Intervene and override agent decisions.

- Pause or halt behaviors.

- Adjust parameters and preferences mid-task.

UX affordances for these controls must be accessible but unobtrusive, supporting both novice and power users.
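The controls listed above can be prototyped as a small task runner that checks for user intervention before every step. This is a simplified sketch under stated assumptions: `InterruptibleTask` and its methods are hypothetical names, and the steps are plain strings standing in for real agent actions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class InterruptibleTask:
    """Hypothetical multi-step agent task the user can steer at any point."""
    steps: list
    settings: dict = field(default_factory=dict)
    paused: bool = False
    halted: bool = False
    done: list = field(default_factory=list)

    def pause(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def halt(self) -> None:
        # Halting is permanent for this task; remaining steps never run.
        self.halted = True

    def adjust(self, **changes) -> None:
        # Change parameters and preferences mid-task.
        self.settings.update(changes)

    def override_next(self, replacement: str) -> None:
        # Replace the agent's next planned step with the user's decision.
        if not self.steps:
            raise ValueError("No pending step to override")
        self.steps[0] = replacement

    def run_next(self) -> Optional[str]:
        # Check user controls before acting, never after.
        if self.halted or self.paused or not self.steps:
            return None
        step = self.steps.pop(0)
        self.done.append(step)
        return step


task = InterruptibleTask(
    steps=["search flights", "book flight", "email itinerary"],
    settings={"max_price": 500},
)
task.run_next()                       # agent performs the first step
task.pause()                          # user steps in
task.adjust(max_price=400)            # user changes a preference mid-task
task.override_next("hold flight for review")
task.resume()
print(task.run_next())
```

Checking the pause and halt flags at the top of `run_next` is the key point of the sketch: the user’s intervention always takes effect before the agent’s next action.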

4. Tone and Relational UX

Autonomous agents often act for the user rather than with the user. That relationship requires a tone that is confident but not authoritarian—collaborative, not condescending.

- Train agents to express intent, not assumption.

- Avoid over-promising outcomes (“I’ll try to…” vs. “I’ve solved it”).

- Use consistent agent personalities to foster familiarity over time.

The “AI-First” Design Mindset

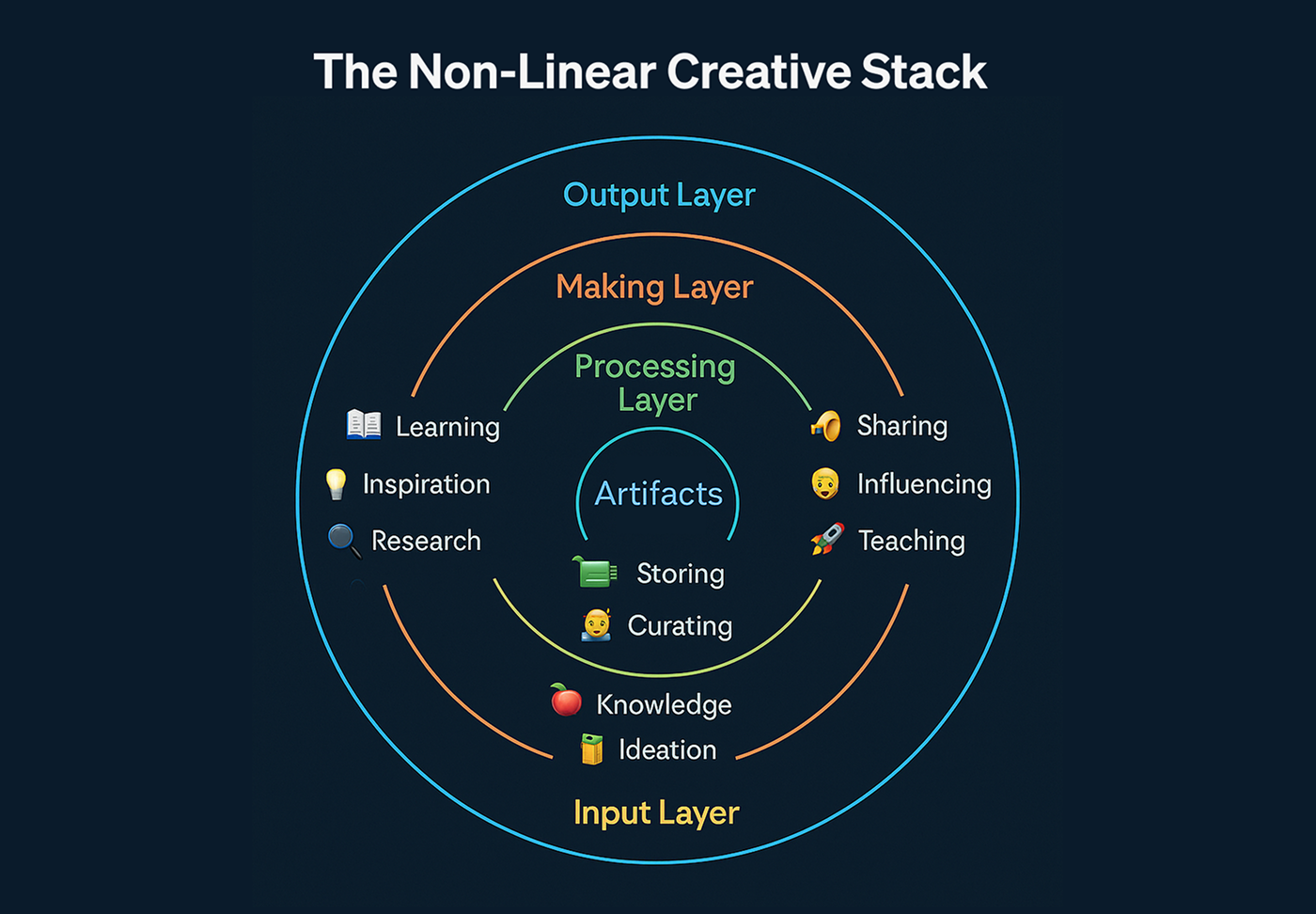

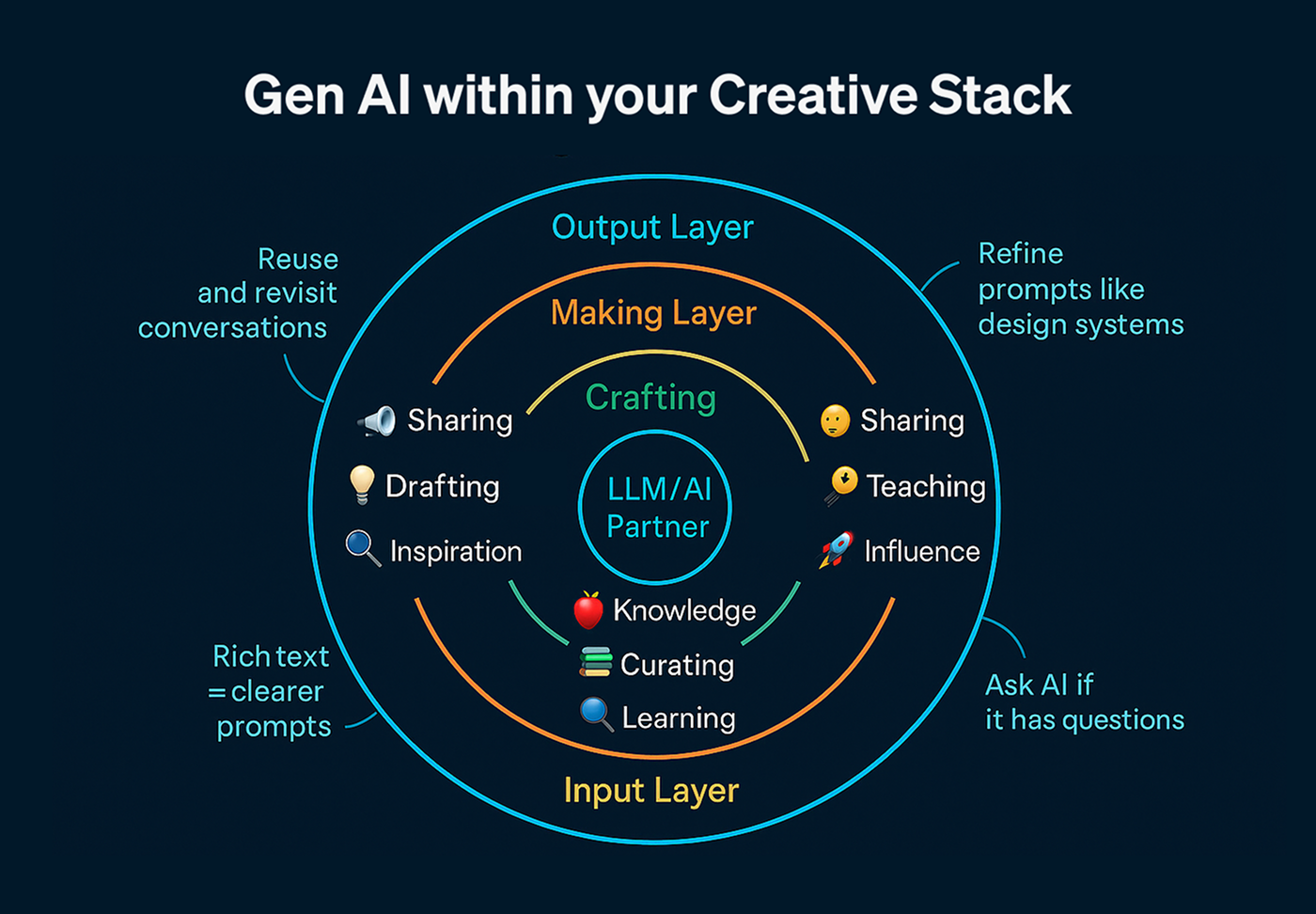

What ties all this together is a shift toward the AI-first design philosophy—treating AI agents not as features to fit into old UI patterns, but as primary actors in the product experience.

This mirrors what Robb Wilson calls “the invisible layer” in his book:

“Interfaces will become less visible, but the systems behind them more intelligent. The UX challenge is no longer about placement—it’s about partnership.”

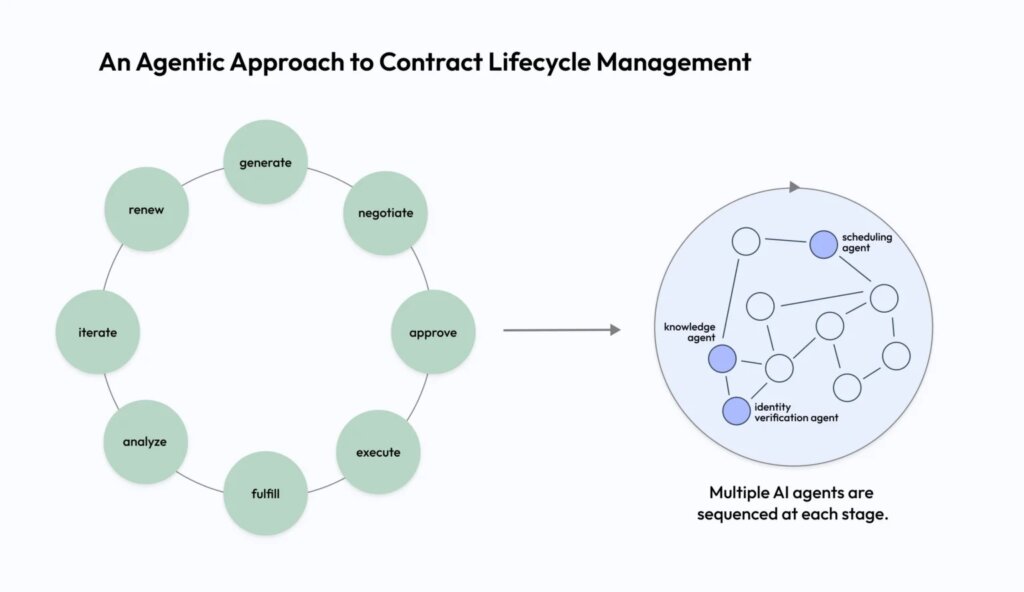

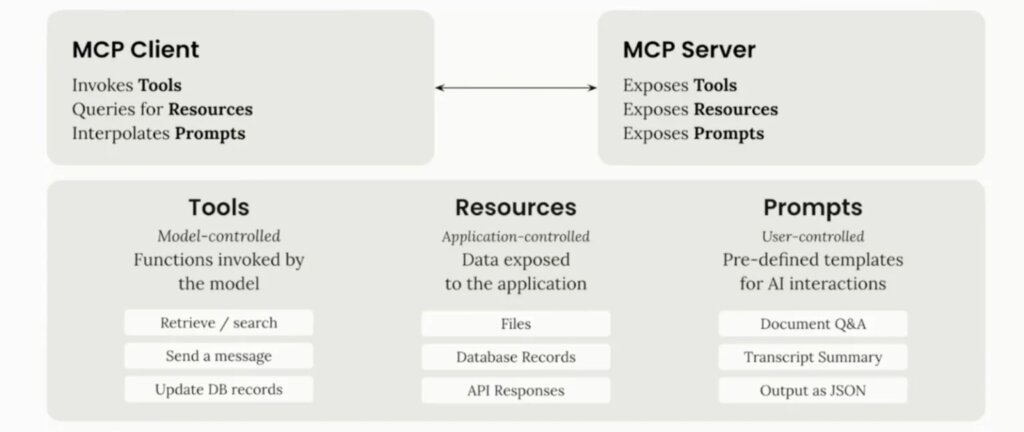

Companies like OneReach.ai are pioneering architectures where agents operate within runtime environments that allow for persistent goals, distributed memory, and composability across functions. These platforms demand a new design grammar—one where intent flows trump screens, and success depends on orchestration, not sequence.

From Theory to Practice: Starting Points for Design Teams

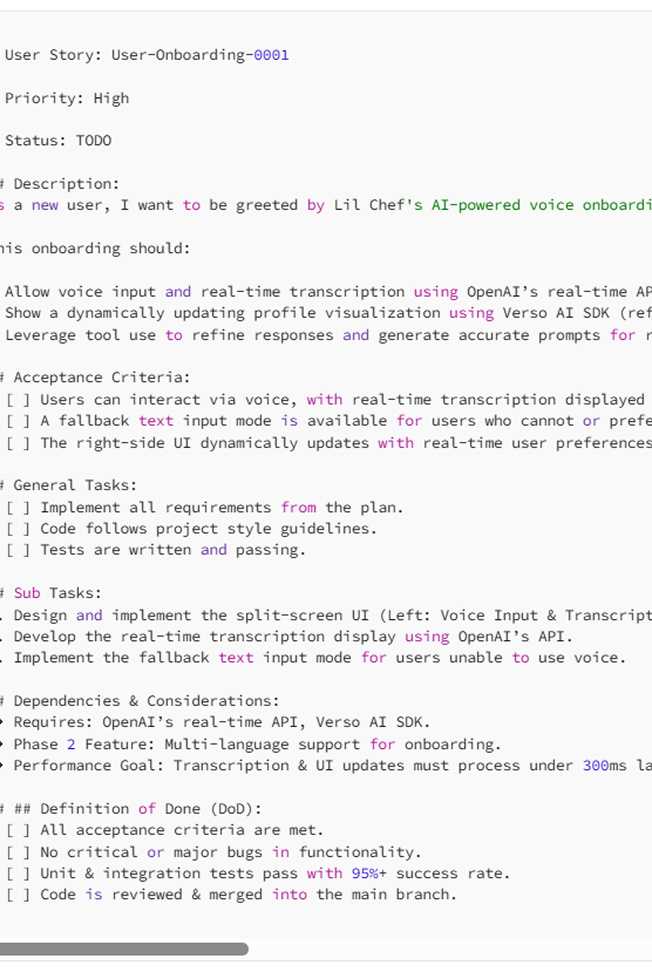

AI First Principles is an open-source project, a kind of Agile Manifesto for this AI moment, meant to help teams avoid the fragmentation, bureaucracy, and failures we’re seeing. Contributors include executives leading AI strategy at the federal government, large healthcare organizations, Meta, Salesforce, Amazon, Microsoft, and several startups, as well as one of the authors of the original Agile Manifesto (read the entire manifesto at https://www.aifirstprinciples.org/manifesto). Here are a few first principles we’ve borrowed from it:

- Design around outcomes, not flows: Start with the goals users want to achieve, then build agent behaviors and UX affordances around them.

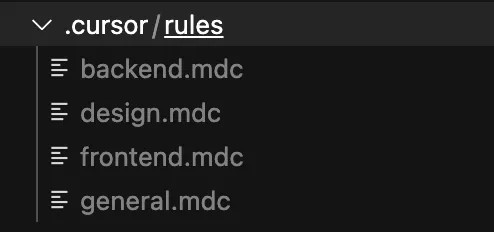

- Prototype agent behavior: Use scripts, roleplay, or logic models to define how agents should respond in different contexts—before committing to UI decisions.

- Create explainability as a service: Design reusable components that show “why” and “how” an agent acted, so every product doesn’t reinvent this wheel.

- Involve design early in runtime planning: Your UX team should be in the room when agent architectures and memory strategies are discussed—they directly impact experience.

Final Thoughts

Autonomous agents change the UX game. They don’t just challenge how we design interfaces—they challenge what an interface is. As AI becomes more proactive, design becomes more about choreography than control.

To keep pace, designers must embrace new mental models, new tools, and new responsibilities. We’re no longer just designing for screens—we’re designing for behavior, for trust, and for collaboration with intelligent systems that never sleep.

And if that sounds daunting, remember: we’ve done this before. Every time technology changed what was possible, design adapted to make it usable.

This time, we’re not just designing machines. We’re designing how machines act for us when we’re not watching.

Sources:

Wilson, Robb (with Josh Tyson). Age of Invisible Machines. Hoboken, NJ: Wiley, 2022.

Invisible Machines (podcast). Hosted by Robb Wilson. UX Magazine. https://uxmag.com/

AI First Principles. “AI First Principles Manifesto.” https://www.aifirstprinciples.org/manifesto (accessed August 15, 2025).

The post Designing for Autonomy: UX Principles for Agentic AI Systems appeared first on UX Magazine.