#179 – Mariya Moeva on the Impact of Google’s SiteKit on WordPress

Transcript

[00:00:19] Nathan Wrigley: Welcome to the Jukebox Podcast from WP Tavern. My name is Nathan Wrigley.

Jukebox is a podcast which is dedicated to all things WordPress, the people, the events, the plugins, the blocks, the themes, and in this case, how the Google Site Kit plugin is attempting to simplify their product offering, right inside of WordPress.

If you’d like to subscribe to the podcast, you can do that by searching for WP Tavern in your podcast player of choice, or by going to wptavern.com/feed/podcast, and you can copy that URL into most podcast players.

If you have a topic that you’d like us to feature on the podcast, I’m keen to hear from you and hopefully get you, or your idea, featured on the show. Head to wptavern.com/contact/jukebox, and use the form there.

So on the podcast today we have Mariya Moeva. Mariya has more than 15 years of experience in tech across search quality, developer advocacy, community building and outreach, and product management. Currently, she’s the product lead for Site Kit, Google’s official WordPress plugin.

She’s presented at WordCamp Europe in Basel this year and joins us to talk about the journey from studying classical Japanese literature to fighting web spam at Google, and eventually shaping open source tools for the web.

Mariya talks about her passion for the open web, and how years of direct feedback from site owners shaped the vision for Site Kit: making complex analytics accessible and actionable for everyone, from solo bloggers to agencies and hosting providers.

Site Kit has had impressive growth for a WordPress plugin. Currently there are 5 million active installs and a monthly user base of 700,000.

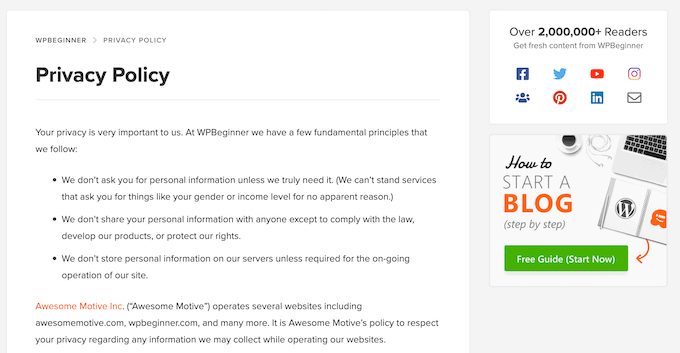

We learn how Site Kit bundles core Google products like Search Console, Analytics, PageSpeed Insights, and AdSense into a simpler, curated WordPress dashboard, giving actionable insights without the need to trawl through multiple complex interfaces.

Mariya explains how the plugin is intentionally beginner friendly with features like role-based dashboard sharing, integration with WordPress’ author and category systems, and some newer additions like Reader Revenue Manager to help site owners become more sustainable.

She shares Google’s motivations for investing so much in WordPress and the open web, and how her team is committed to active support, trying to respond rapidly on forums and listening closely to feedback.

We discuss Site Kit’s roadmap, from benchmarking and reporting features, to smarter, more personalized recommendations in the future.

If you’ve ever felt overwhelmed by analytics dashboards, or are looking for ways to make data more practical and valuable inside WordPress, this episode is for you.

If you’re interested in finding out more, you can find all of the links in the show notes by heading to wptavern.com/podcast, where you’ll find all the other episodes as well.

And so without further delay, I bring you Mariya Moeva.

I’m joined on the podcast by Mariya Moeva. Hello, Mariya. Nice to meet you.

[00:03:35] Mariya Moeva: Nice to be here.

[00:03:36] Nathan Wrigley: Mariya is doing a presentation at WordCamp Europe. That’s where we are at the moment, and we’re going to be talking about the bits and the pieces that she does around Site Kit, the work that she does for Google. Given that you are a Googler, and that we’re going to be talking about a product that you have, will you just give us your bio? I’ve got it written here, you obviously put one on the WordCamp Europe website. But just roughly what is your place in WordPress and Google and Site Kit and all of that?

[00:04:05] Mariya Moeva: Yeah. I mean, I’ve had a very meandering path. When you would look back to what I studied, which was, you know, classical Japanese literature, all these poems about the moon and the cherry blossoms, who would’ve thought at that time that I would end up building open source plugins? But I did have a meandering path and I ended up here because, mostly because of passion for the open web, and for all kinds of weird websites that exist out there. I really love stumbling upon something great.

I started at Google on the web spam team, actually looking into the Japanese spam market, because of this classical Japanese literature degree and the Japanese skills. And then after a couple years or so, I basically despaired of humanity because all you look at is spam every day. Bad sites, hacked sites, malicious pages. And I just wanted to do something that makes the web better rather than removing all the bad stuff.

And so I switched over to an advocacy role, and in that role I essentially was traveling, maybe attending 20, 30 conferences every year, talking to a lot of people about their needs, what they have to complain about Google, what requests they have. And I would collect all of this feedback, and then I would go back to the product teams and I would say, hey, this and this is something that people really want. And they would say, thank you for your feedback.

Essentially at one point I said, okay, we’re going to build this thing, and that’s why I switched into product role. And I was able to take all the feedback over the years, that we’ve gotten from developers and site owners, and to try to build something that makes sense for them. So that’s how I ended up in the product role for building Site Kit.

And the idea from the very beginning was to make it beginner friendly and to make it from their perspective to match that feedback, rather than doing something that is like, here’s your stuff from analytics, here’s your stuff from Search Console, figure it out. That’s how we ended up building this and it’s been now five years. And it actually just a month ago entered the top 10 plugins. So clearly people find some value in it.

We have 700,000 people that use it every month. And overall it’s currently at 5 million active installs, meaning that these sites are kind of pinging WordPress so they’re alive and kicking. It’s been very encouraging to see that what we’re doing is helpful to people and we will keep going. There’s a lot to do.

[00:06:29] Nathan Wrigley: I think it’s kind of amazing because in the WordPress space, there are some of the, let’s call them the heavy hitters. You know, the big plugins that we’ve all heard of, the Yoasts of this world, that kind of thing. Jetpack, all those kind of things. This, honestly, has gone under the radar a bit for me, and yet those numbers are truly huge. Four and a half to 5 million people over a span of five years is really rather incredible.

[00:06:54] Mariya Moeva: It grew very fast, yeah.

[00:06:55] Nathan Wrigley: Yeah. And yet it’s not one that, well, I guess most people are reaching out to plugins to solve a problem, often a business problem. So, you know, there’s this idea of, I install this and there’s an ROI on that. This is not really that, not really ROI, it’s more site improvement. Okay, here’s a site that needs things fixing on it. Here’s some data about what can be fixed. And so maybe for that reason and that reason alone, it’s flown under the radar for me because it doesn’t have that commercial component to it.

[00:07:24] Mariya Moeva: Yeah, for sure. It’s for free and it’s not something that, yeah, sells features or has like a premium model and we don’t market it so much. But I run a little survey in the product where people tell us where they heard from it, and a lot of the responses are either YouTube video, or like blog posts or word of mouth. So it seems to be spreading more that way.

[00:07:46] Nathan Wrigley: Yeah, no kidding. I’ll just say the URL out loud in case you’re at a computer when you’re listening to this. It’s SiteKit, as one word, dot withgoogle.com. I don’t know if that’s the canonical URL, but that’s where I ended up when I did a quick search for it. So sitekit.withgoogle.com. And over there you’ll be able to download, well, as it labels itself, Google’s official WordPress plugin.

The first thing that surprises me is, a, Google’s interest in WordPress. That is fascinating to me. I mean, obviously we all know, Google is this giant, this leviathan. Maybe you’ve got interest in other CMSs, maybe not. I don’t really know. But I think that’s curious. But obviously 43% of the web, kind of makes sense to partner with WordPress, doesn’t it? To improve websites.

[00:08:31] Mariya Moeva: Yeah. I work with plenty of CMSs. I work with Wix, with Squarespace, and we essentially what I try to do and what my team tries to do, we are called the Ecosystem Team. So we want to bring the things that we think would be useful to site owners and businesses directly to where they are.

So if you are in your Wix dashboard, you should be able to see the things from Google that are useful. And same if you are in WordPress. And obviously WordPress is, orders of magnitude, a bigger footprint than any of the others. And also it has this special structure where everything is decentralised and people kind of mix and match. So that’s why we went with the plugin model. And using the public APIs, we want to show what’s possible.

Because all the data that we use is public data. There’s no special Google feature that only the Google product gets, right? We are just combining it in interesting ways because I’ve spent so much time talking to people, like what they need. And so we just curate and combine in ways that are actually helping people to make decisions and to kind of clear the clutter.

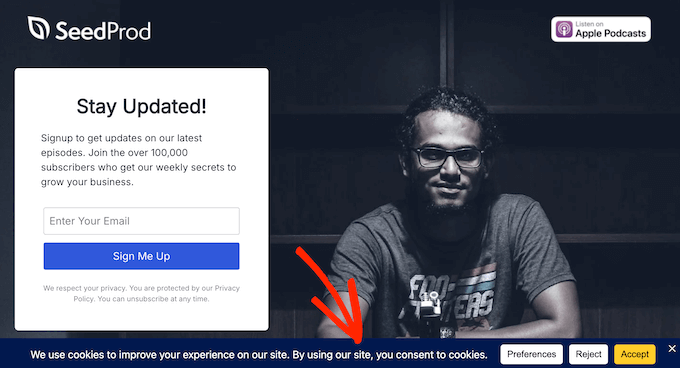

Because when you go to analytics, it’s like 50 reports and so many menus and it’s like, where do I start? So we try to give a starting point in Site Kit. And we also try to help with other things like make people sustainable. One thing that we recently launched just a month ago is called Reader Revenue Manager. So you can put a little prompt on your site, which asks people to give you like $2 or whatever currency you are in, or even put like a subscription.

And so the idea is you don’t have to have massive traffic in order to generate revenue from your content. If you have your hundred or thousand loyal readers, they can help you be more sustainable. So we’re looking at these kind of features, like what can we launch that is more for small and medium sites and would be helpful? And how can we make it as simple as possible? So that people don’t kind of drop off during the setup because it’s too complicated.

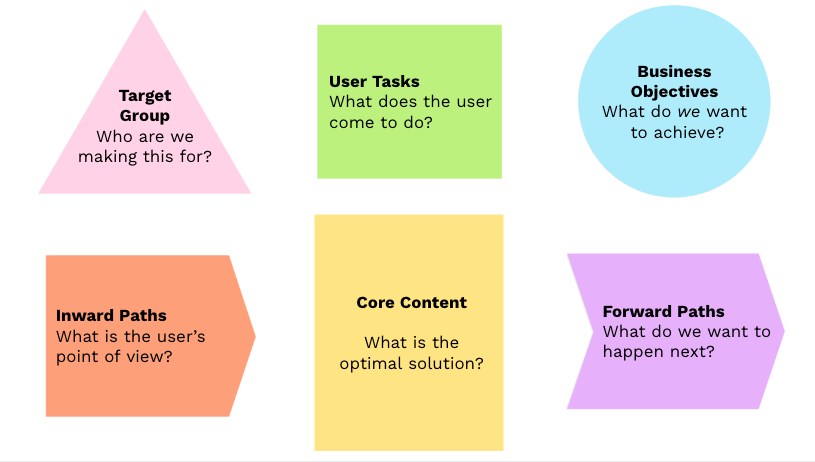

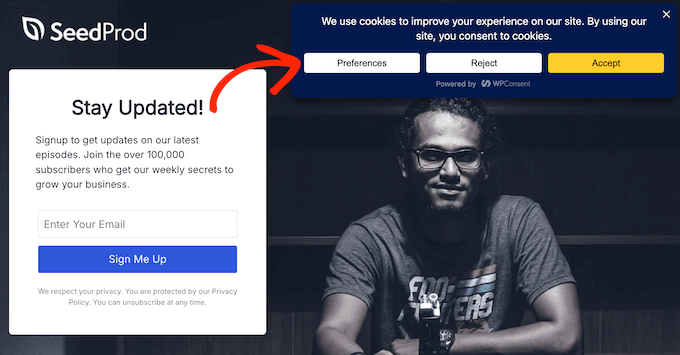

[00:10:33] Nathan Wrigley: Would it be fair to summarise the plugin’s initial purpose as kind of binding a bunch of Google products, which otherwise you would have to go and navigate to elsewhere? So for example, I’m looking at the website now, Search Console, Analytics, PageSpeed Insights, AdSense, Google Ads, and all of those kind of things. Typically we’d have to go and, you know, set up an account. I guess we’d have to do that with Site Kit anyway. But we’d have to go to the different URLs and do all of that.

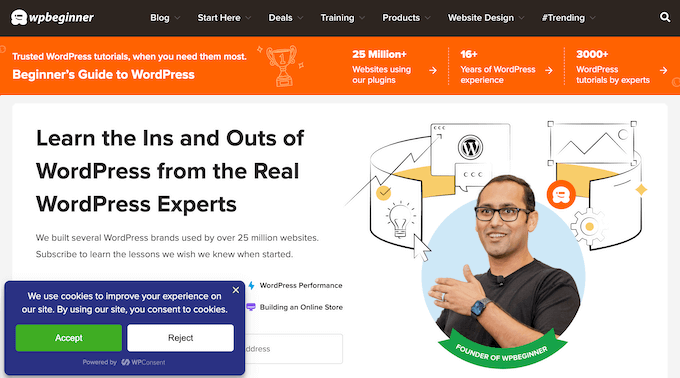

The intention of this then is to bind that inside of the WordPress UI, so it’s not just the person who’s the admin of that account. You can open it up so that people who have the right permissions inside of WordPress, they can see, for example, Google Analytics data. And it gets presented on the backend of WordPress rather than having to go to these other URLs. Is that how it all began as a way of sort of surfacing Google product data inside the UI of WordPress?

[00:11:21] Mariya Moeva: Yeah, we wanted to bring the most important things directly to where people are, so they don’t have to bother going to 15 places. And we wanted to drastically decrease and curate the information so that it’s easy to understand, because when you have 15 dashboards in Analytics and 15 dashboards in Search Console, and then you have to figure out what to download and in which spreadsheet to merge and how to compare, then this is. Maybe if you have an agency taking care of it, they can help you. But if you don’t, which 70% of our users say that they’re a one-person operation, so they’re taking care of their business, and on top of that, the website. We wanted to make it simpler to understand how you’re doing, and what you should do next with Google data.

[00:12:02] Nathan Wrigley: So it’s a curated interface. So it’s not, I mean, maybe you can pull in every single thing if you so wish. But the idea is you give a, I don’t know, an easier to understand interface to, for example, Google Analytics.

That was always the thing for me in Google Analytics. I’m sure that if you have the time and the expertise, like you’re an agency that deals with all of that, then all of that data is probably useful and credible. But for me, I just want to know some top level items. I don’t need to dig into the weeds of everything.

And there was menus within menus, within menus, and I would get lost very quickly, and dispirited and essentially give up. So I guess this is an endeavor to get you what you need quickly inside the WordPress admin, so you don’t have to be an expert.

[00:12:43] Mariya Moeva: Yeah. And then it gets more powerful when you are able to combine data from different products. So, for example, we have a feature called Search Funnel in the dashboard, which lets you, it combines data from Search Console on search impressions and search clicks, and then it combines data from Analytics on visitors on the site and conversions. So it kind of helps you map out the entire path, versus having to go over here, having to go over there, having to combine everything yourself. So when you combine things, then it gets also more powerful.

We have another feature which lets you combine data from AdSense and Analytics. So if you have AdSense on your site, you can then see which pages earn you the most revenue. So when you have that, suddenly you can see, okay, so I have now these pages here, what queries are they ranking for? How much time people spend on them? Can I expand my content in that direction? It helps you to be more focused in kind of the strategy that you have for your site.
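As a rough illustration of the Search Funnel idea described above, here is a minimal Python sketch that joins two Search Console figures with two Analytics figures and derives the rate between stages. The `FunnelInputs` class, its field names, and the sample numbers are all hypothetical; this is not Site Kit's code, only one way such a combination could be computed.

```python
from dataclasses import dataclass


@dataclass
class FunnelInputs:
    impressions: int   # Search Console: times the site appeared in search results
    clicks: int        # Search Console: clicks from search results
    visitors: int      # Analytics: visitors on the site
    conversions: int   # Analytics: completed goals (purchase, signup, ...)


def stage_rates(f: FunnelInputs) -> dict:
    """Return the share of one funnel stage that reaches the next one."""
    def ratio(numerator: int, denominator: int) -> float:
        # Guard against empty stages so a brand-new site does not divide by zero.
        return numerator / denominator if denominator else 0.0

    return {
        "click_through_rate": ratio(f.clicks, f.impressions),
        "conversion_rate": ratio(f.conversions, f.visitors),
    }


# Hypothetical numbers for one week.
week = FunnelInputs(impressions=2000, clicks=100, visitors=80, conversions=4)
rates = stage_rates(week)
```

Seeing both rates side by side is what makes the combined view useful: a healthy click-through rate with a weak conversion rate points at the site itself rather than at search visibility.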

[00:13:45] Nathan Wrigley: Is it just making, I mean, I say just, is it making API calls backwards and forwards to Google’s Analytics, Search Console, whatever, and then displaying that information, or is it kind of keeping it inside the WordPress database?

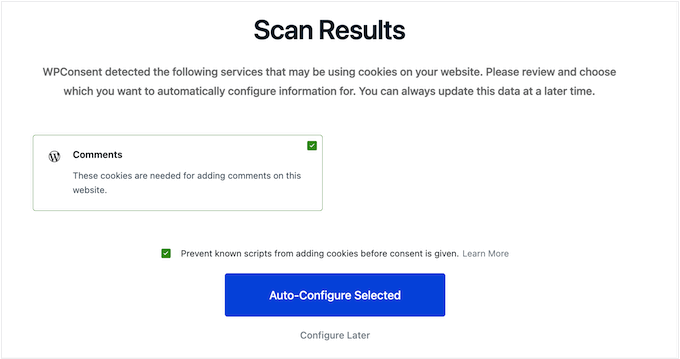

[00:13:58] Mariya Moeva: We don’t store anything, well, almost anything. Yeah, we wanted to keep the data as secure as possible, so we created this proxy service, which kind of helps to exchange the credentials. So the person can authenticate with their Google account, and then from there, the data is pulled via API, and we cache the dashboard for one hour. After that we refresh the authentication token. From the data itself, nothing is stored.
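The one-hour dashboard cache mentioned here can be pictured as a simple time-to-live cache. The sketch below is an assumption-laden illustration in Python: the `DashboardCache` class, the in-memory storage, and the injected `clock` are invented for the example, and the transcript does not say how or where Site Kit actually holds its cached data.

```python
import time
from typing import Any, Callable, Optional


class DashboardCache:
    """Keep one fetched dashboard payload for a limited time (default: one hour)."""

    def __init__(
        self,
        fetch: Callable[[], Any],
        ttl_seconds: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch          # hypothetical function that calls the remote API
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so the expiry logic can be tested
        self._value: Any = None
        self._expires_at: Optional[float] = None

    def get(self) -> Any:
        now = self._clock()
        # Fetch on first use, or again once the cached copy has expired.
        if self._expires_at is None or now >= self._expires_at:
            self._value = self._fetch()
            self._expires_at = now + self._ttl
        return self._value


# Usage with a fake clock and a fake fetch function.
calls = []
fake_now = [0.0]


def fake_fetch():
    calls.append(1)
    return {"clicks": 20 * len(calls)}


cache = DashboardCache(fake_fetch, ttl_seconds=3600, clock=lambda: fake_now[0])
first = cache.get()        # first call fetches
fake_now[0] = 1800.0
second = cache.get()       # half an hour later: served from the cache
fake_now[0] = 3600.0
third = cache.get()        # one hour later: fetched again
```

Only the short-lived copy is held; once it expires, the next view triggers a fresh API call rather than reading stored history.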

[00:14:23] Nathan Wrigley: So it’s just authentication information really that’s stored. Well, that’s kind of a given, I suppose. Otherwise you’ll be logging in every two minutes.

[00:14:29] Mariya Moeva: Right. So that’s the model that we have because we really wanted people to be able to access this data, but also to keep it secure. And because of how the WordPress database is, we didn’t feel like we could save it there.

[00:14:41] Nathan Wrigley: It sounds from what you’ve just said, it’s as if it’s combining things from a variety of different services, kind of linking them up in a structured way so that somebody who’s not particularly experienced can make connections between, I don’t know, ads and analytics. The spend on the ads and the analytics, you know, the ROI if you like.

Does it do things uniquely? Is there something you can get inside of Site Kit which you could not get out of the individual products if you went there? Or is it just more of a, well, we’ve done the hard work for you, we’ve mapped these things together so you don’t have to think about it?

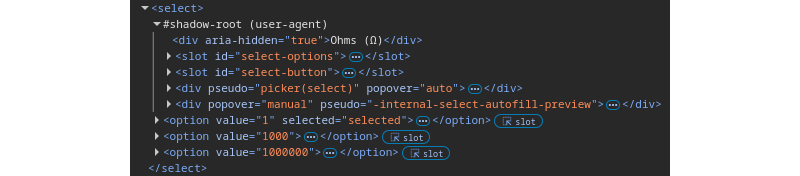

[00:15:10] Mariya Moeva: The one thing that it does that I’m super excited about, and we’ll build on that, but we have the fundamental of it now, is it actually creates data for you. Because in contrast to Search Console or Analytics or all these other, which are kind of Google hosted, they can only tell you like a long help center article, go there on your site, then click this, then paste this code, right? They cannot help you with this, whereas Site Kit is on the website.

So if you agree, which we don’t install anything without people’s consent, like they have to activate the feature, but if you agree, then we can do things on your behalf. So for example, we can track every time someone clicks the signup button and we can generate an analytics event for you, even if that plugin normally doesn’t send analytics events. And that way, suddenly you have your conversion data available.

So very often people look to the top of the funnel, like how many people came to my site? But they don’t look to what these people did beyond kind of, oh, they stayed two minutes. So what does this mean? You want to see, did they buy the thing? Did they sign up for the thing, or subscribe or whatever it is? And we help create this data because we have this unique access to the source code of the site.

So we create, for example, on leads generation or purchases. We also, every time that a specific page is viewed, we will generate an event about the author of the page. So then we can aggregate the data, which authors bring in the most page views. Let’s say you have like a site with five, six, whatever authors. Or which categories are bringing in the most engagement and these kind of things.
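To make the author and category aggregation concrete, here is a small Python sketch that counts page-view events per author or per category. The event shape (`name`, `author`, `category` keys) and the sample data are assumptions made for illustration; the actual events Site Kit sends to Analytics are not described in this conversation.

```python
from collections import Counter
from typing import Iterable, Mapping


def page_views_by(events: Iterable[Mapping[str, str]], dimension: str) -> Counter:
    """Count page-view events per value of one dimension, e.g. 'author'."""
    counts: Counter = Counter()
    for event in events:
        # Only page views are counted; other events (such as clicks) are skipped.
        if event.get("name") == "page_view" and dimension in event:
            counts[event[dimension]] += 1
    return counts


# Hypothetical events, one per page view or button click.
events = [
    {"name": "page_view", "author": "ana", "category": "recipes"},
    {"name": "page_view", "author": "ben", "category": "travel"},
    {"name": "page_view", "author": "ana", "category": "travel"},
    {"name": "signup_click", "author": "ana"},
]
by_author = page_views_by(events, "author")
by_category = page_views_by(events, "category")
```

Because the author and category travel with each event, the same list of events can answer both "which authors bring in the most page views" and "which categories get the most engagement".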

[00:16:52] Nathan Wrigley: So it really does get very WordPressy. It’s not just to do with the Google side of things. It is mapping information from Google, so categories, author profiles, that kind of thing, and mapping them into the analytics that you get. Okay, that’s interesting. So it’s a two-way process, not just a one-way process.

[00:17:09] Mariya Moeva: Yeah. It’s very much integrated with WordPress. We have also a lot of other features, like for example, that kind of stretch into other parts of the website. So this Reader Revenue Manager that I mentioned before with the prompts that you can put on your pages. You can go to the individual post and for every post there’s like a little piece of control UI that we’ve added there in the compose screen, where you can say, this is excluded from this prompt, or, you know, you can control from there.

So we try to integrate where it makes sense, like where the person would want to take this action. And again, because it’s on the website, we can kind of spread out beyond just this one dashboard.

[00:17:48] Nathan Wrigley: And would I, as a site admin, would I be able to assign permissions to different user roles within WordPress? So for example, an editor, or a certain user profile, may be able to see a subset of data. You know, for example, I don’t know, you are involved in the spending on AdSense. But you, other user over there, you’ve got nothing to do with that. But you are into the analytics, so you can see that, and you over there you can see that. Is that possible?

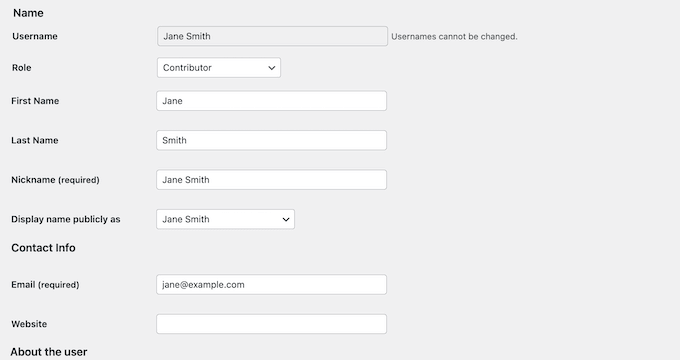

[00:18:12] Mariya Moeva: We have something called dashboard sharing. So it has the same, like if you use Google Docs or anything like that, it has this little person with a plus in the corner, icon. And then from there, if you are the admin who set up this particular Google Service, who connected it to Site Kit, then you’re able to say who should be able to see it. So you essentially grant view only access to, let’s say all the editors, or all the contributors or whatever. And then you can choose which Google service’s data they can see.

[00:18:44] Nathan Wrigley: So yes is the answer to that, yeah.

[00:18:46] Mariya Moeva: Yeah, yeah. So they don’t have to set it up, I mean, they have to go through a very simplified setup, and then they basically get a kind of a screenshot. I mean it’s, you can still click on things, but you can’t change anything, so it’s kind of a view-only dashboard.
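The dashboard sharing model described in this exchange, view-only access granted per Google service and per WordPress role, could be modeled along these lines. This is a hypothetical Python sketch: the `SharingSettings` class and the service names are invented for the example and do not reflect Site Kit's real data structures.

```python
from dataclasses import dataclass, field


@dataclass
class SharingSettings:
    # Maps a service name to the set of WordPress roles granted view-only access.
    shared_roles: dict = field(default_factory=dict)

    def share(self, service: str, role: str) -> None:
        """Grant a role view-only access to one service's data."""
        self.shared_roles.setdefault(service, set()).add(role)

    def can_view(self, service: str, role: str) -> bool:
        return role in self.shared_roles.get(service, set())

    def visible_services(self, role: str) -> list:
        """List the services a role may see, in alphabetical order."""
        return sorted(s for s, roles in self.shared_roles.items() if role in roles)


# The admin who connected each service decides which roles can see it.
settings = SharingSettings()
settings.share("analytics", "editor")
settings.share("search_console", "editor")
settings.share("analytics", "contributor")
```

Nothing in the model allows a shared role to change settings, which mirrors the view-only dashboard described above.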

[00:18:59] Nathan Wrigley: I’m kind of curious about the market that you pitch this to. So sell is the wrong word because it’s a free plugin, but who you’re pitching it at. So obviously if you’ve got that end user, the site owner. Maybe they’ve got a site and they’ve got a small business with a team. Maybe it’s just them, so there’s the whole permissions thing there.

But also I know that Google, there are whole agencies out there who just specialise in Google products, and analysing the data that comes out of Analytics. Can you do that as well as an agency? Could I set this up for my clients and have some, you know, I’ve got my agency dashboard and I want to give this client access to this website, and this website and this website, but not these other ones? Can it be deployed on a sort of agency basis like that?

[00:19:38] Mariya Moeva: You would still have to activate it for every individual site. So in that sense, there’s a bunch of steps that you have to go through. But once it’s activated, you can then share with any kind of client. And actually we have a lot of agencies that can install it for every site that they have.

Just today someone came and after he saw the demo, he was like, okay, I’m going to install it for all my clients. Because what we’ve heard is that it’s exactly the level of information that a client would benefit from. And this means then that they pester the agency less. So we’ve literally heard people saying, you’re saving me a lot of phone calls. So that’s why agencies really like it.

And the next big feature request, which we’re working on right now, is to generate like an email report out of that. So for those who don’t even want to log into WordPress to see, there will be a possibility to get this in their inbox.

[00:20:30] Nathan Wrigley: So you could get it like a weekly summary, whatever it is that you wish to trigger. And, okay, so that could go anywhere really. And then your clients don’t even need to phone you about that.

[00:20:41] Mariya Moeva: Yeah. So we are trying to really actively reach people where they are, even if that’s their email inbox.

[00:20:49] Nathan Wrigley: And the other question I have is around your relationship with some of the bigger players, maybe hosting companies. Do you have this pre-installed on hosting cPanels and their, you know, whatever it is that they’ve got in their back end?

[00:21:02] Mariya Moeva: Yeah, we have quite a few hosting providers that pre-install it for their WordPress customers. The reason for this is that they see better lifetime value for those customers that have a good idea of how their site is doing. And yeah, Hostinger is one of those. cPanel. Elementor pre-installs it for all of their users. And they see very good feedback because again, it’s super simple to set up and super easy to understand once you have it. So for them it’s kind of like an extra feature that they can offer, extra value to their users for free.

[00:21:32] Nathan Wrigley: We know Google’s a fabulous company, but you don’t do things for nothing. So what’s the return? How does it work in reverse? So we know that presumably there must be an exchange of data. What are we signing up for if we install Site Kit?

[00:21:47] Mariya Moeva: So, at least, I mean, Google is a huge company, right? There’s hundreds of thousands of people working. So I can’t speak for the whole of Google, but I can speak for the Ecosystem Team, which I’m part of, like the web ecosystem.

The main investment here, or the main goal for us is that the open web continues to thrive, because if people don’t put content, interesting, relevant content on the open web, the search results are going to be very poor and that’s not a good product.

So our idea is to support all the people who create content to make sure that they’re found, like if you’re a local business, that people can find you when they need stuff from that particular local business. And what we see is that, especially for smaller and medium sites, they really struggle, first with going online, and then with figuring out what they’re supposed to do. And so a lot of them give up because in comparison to other platforms, it’s a little bit of an upfront investment, right? Like you have to pay for hosting, you have to set up the site, you have to add content.

So we try to help people as much as we can to see the value that the open web brings to them, so that they can continue to create for the open web. So that’s our hidden motivation. I think in that sense, we’re very much aligned with the WordPress community because here everybody cares about the open web and for all kind of small, weird websites to continue flourishing and get their like 100 or 300 or 1,000 readers that they deserve.

So that’s the motivation. I think because it includes other things like AdSense and AdWords, like people can set up an ads campaign directly from Site Kit in a very simplified flow, and the same thing for AdSense. Obviously some money exchanges hands, but this is relatively minor compared to the benefit that we think there is for the web in general.

[00:23:35] Nathan Wrigley: Google really does seem to have a very large presence at WordPress events. I mean, I don’t know about the smaller ones, you know, the regional sort of city based events, but at the, what they call flagship events, so WordCamp Asia and WordCamp Europe and US, there’s the whole sponsor area. And it’s usual to see one of the larger booths being occupied by Google. And I wonder, is it Site Kit that you are talking about when you are here or is it other things as well?

But also it’s curious to me that Google would be here in that presence, because those things are not cheap to maintain. So there must be somebody up in Google somewhere saying, okay, this is something we want to invest in. So is it Site Kit that you are basically at the booth talking about?

[00:24:19] Mariya Moeva: So me, yes, or people on my team. We have like a Site Kit section this year. There’s also Google Trends. There’s also some other people talking about user experience and on search. And this changes depending on which teams within Google want to reach out to the WordPress community.

But with Site Kit, we’ve been pretty consistent for the last six years. We are always part of the booth. But the kind of whole team, like the whole Google booth content has kind of changed over the years as well depending on who’s coming.

[00:24:51] Nathan Wrigley: I know that a lot of work being done is surrounding performance and things like that, and a lot of the Google staff that are in the WordPress space seem to be focused on that kind of thing, talking about the new APIs that are shipping in the browsers and all of those kind of things.

Okay, so on the face of it, a fairly straightforward product to use. But I’m guessing the devil is in the detail. How do you go about supporting this? So for example, if I was to install it and to run into some problems, do you have like a, I don’t know, a documentation area or do you have support, or chat or anything like that? Because I know that with the best will in the world, people are going to run into problems. How do people manage that kind of thing?

[00:25:27] Mariya Moeva: Yeah, this was something that I was super, I felt really strongly about based on my previous experience in the developer advocate world. Because very often I got feedback that it’s super hard to reach Google. And it’s also understandable given the scale of some of the products.

But when I started this project I insisted that we allocate resources for support. So we have two people full-time support. One of them is upstairs, the support lead. He knows the product inside and out. They’re always on the forum, the plugin forum, support forum. And they answer usually within 24 hours. So everybody who has a question gets their question answered.

We’ve also created the very detailed additions. When you have Site Kit, you also get a few additions to the Site Health forum, so you can share that information with them and they see like detailed stuff about the website so they can help debug. And in many, many cases, I’ve seen people coming pretty angry, leave a one star review, then James or Adam who are support people, engage with them, and then it turns into a five star review because they feel like, okay, someone listened to me and helped me figure out what is going on.

We have real people answering questions relatively quickly. And they don’t just go, of course they focus on the WordPress support forum, but they also check Reddit and other places where people, like, mention Site Kit, and they try to help and to direct them to the right place. So for Site Kit, we have very robust support.

Now, when it’s an issue with a product, a Google product that is connected to Site Kit, so it’s not a Site Kit problem, let’s say you got some kind of strange message from AdSense about your account status changing. Then we would have to hand over to the AdSense account manager or support team that they have, because we don’t know everything, like how AdSense makes decisions and stuff like that. But for anything Site Kit related, we are very fast to answer.

[00:27:22] Nathan Wrigley: That’s good to hear because I think you’re right. I think the perception with any giant company is that it kind of becomes a bit impersonal, and Google would be no exception. And having just a forum which never seems to get an answer, you drop something in, six months later, you go back and nobody’s done anything in there except close the thread, kind of slightly annoying. But something like this. So 24 hours, roughly speaking, is the turnaround time.

[00:27:45] Mariya Moeva: Yeah. I mean, not on the weekend, but yeah.

[00:27:46] Nathan Wrigley: Yeah. Still, that’s pretty amazing.

[00:27:47] Mariya Moeva: Yeah, yeah. We are very serious about this because, I mean, also the WordPress community is really strong, right? So you want to show that we care. We want to hear from people. A lot of bugs then also turn into feature requests and get prioritised to be developed. So, yeah, we really value when people come to complain. It’s a good thing.

[00:28:03] Nathan Wrigley: Excellent. Okay, well, we won’t open that as a goal, please send in your complaints. But nevertheless, it’s nice that you take it seriously.

So it sounds like it’s under active development. You sound like this is basically what you’re doing over at Google. Do you have a roadmap? Do you have a sort of laundry list of things that you want to achieve over the next six months? Interesting things that we might want to hear about.

[00:28:21] Mariya Moeva: Sure, yeah. I mean, my ultimate vision, which is not the next six months, I would love to move away as much as possible from just stats. As curated and as kind of structured as it is right now, and get more into like recommendations, and like to-do list. Because what I hear from people again and again, it’s like, I have two hours this month, tell me what should I do with those two hours?

So they’re asking a lot from us. They’re asking essentially to look, analyse everything and to prioritise their tasks, to tell them which one is the most important or most impactful. And this is like several levels of analysis further than where we are now.

So one thing that we are looking to work on is benchmarking, because you cannot know are you growing or not, unless you know how you’re doing on average. And today, people who are a little bit more savvy can do this of course, but a lot of people don’t. And so for us to be able to tell you, not just you got 20 clicks this week, but also this is okay for you, or this is better than last year, this time, or this is better than your competitors. I think that’s a really valuable way to interpret the data and to help people understand what it means.
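The simplest form of the benchmarking described here, telling a site owner whether this week's number is good relative to their own average, might look like the Python sketch below. The `benchmark` function, the 10% tolerance, and the sample click counts are assumptions for illustration; the conversation does not say how Site Kit will compute its benchmarks.

```python
from statistics import mean


def benchmark(current: float, history: list, tolerance: float = 0.10) -> str:
    """Label the current value relative to the average of past values."""
    if not history:
        return "no baseline yet"
    baseline = mean(history)
    if baseline == 0:
        # Any traffic at all beats a baseline of zero.
        return "above average" if current > 0 else "about average"
    change = (current - baseline) / baseline
    if change > tolerance:
        return "above average"
    if change < -tolerance:
        return "below average"
    return "about average"


past_weeks = [10, 12, 14, 12]          # hypothetical weekly clicks, average 12
label = benchmark(20, past_weeks)      # 20 clicks this week
```

Comparing against competitors would need data from other sites, which a single site cannot compute on its own; this sketch only covers the comparison with one's own history.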

[00:29:38] Nathan Wrigley: Yeah. And really, Google is one of the only entities that can provide that kind of data.

[00:29:44] Mariya Moeva: Especially for search.

[00:29:45] Nathan Wrigley: Yeah, especially against competitors. That’s really interesting because analysing the data, whilst it’s fun for some people, I feel it’s not that interesting for most people. And so just having spreadsheets of data, charts of data, it’s interesting and you no doubt gain some important knowledge from it. But being told, here’s the outcomes of that data, try doing this thing and try doing that thing, that is much more profound than just demonstrating the data.

And I’m guessing, I could be wrong about this, and I’ve more or less said this in every interview over the last year, I’m guessing there’s an AI component to all of that. Getting AI to sort of analyse the data and give useful feedback.

[00:30:22] Mariya Moeva: I mean, we are investigating how to do all of these things. I think in the case of WordPress, it’s a little bit trickier again, because of the distributed nature, and the fact that all the site information lives on the site and then all the Google information. So we’re not like fully hosted where you can access everything and control everything, something like a Squarespace or a Wix.

But there’s definitely, like AI is a perfect use case for this, right? Like benchmarking, you can bucket sites into relevant groups and then see, are they performing better or worse? That’s like classic machine learning case. And we will see exactly, technically, how we’re going to reach this, but that’s one of the things that we’re working on right now.

Another thing is to expand much more the conversion reporting and to help people understand, are they achieving their goals? Because this is something that surprisingly to me, so many people pay money and invest time in the site, and they cannot articulate what the site is doing. Is it working? Is it doing its job? And they’re like, well, like I got some people visiting. And I’m like, did they buy the thing? So you have to know what to track, and then also to take action after you see the metrics, like to move them in one direction or another. And so helping people like map out this full funnel is one thing that we’re working on. And the other thing is also this email report.

[00:31:40] Nathan Wrigley: Yeah, that’s amazing. So really under active development. And you sound very impassioned about it. You sound like this has become your mission, you know?

[00:31:47] Mariya Moeva: I think, nobody ever complained that something is easy, right? When you make things simple and easy for people, they appreciate it, even if they’re more knowledgeable and can do more advanced things themselves.

And I personally really care, like every time that I find a random website with really strange content, but just, someone put their soul into it. I recently found something in Zurich of like tours of Zurich, walking tours, by someone who really cares about history and architecture.

And it’s a terrible website design wise, but the content is amazing. And I was like, okay, this person could use some help, but he’s doing, or she’s doing like a great job at the content part, and then should get the traffic that they deserve for this. So that’s what motivates me also to come here.

One person, two or three WordCamps ago came over and was saying, everything about Google is hard except Site Kit. And I was like, yeah, that’s what we are trying to do. We really want to simplify things for you. So, yeah, being here is also super motivating. To talk to people and to hear feedback and feature requests. And again, we like when people come to complain.

[00:32:54] Nathan Wrigley: Well, I was just speaking to a few people prior to you entering the room and those few people all have Site Kit installed on their site. So you’re doing something right.

[00:33:02] Mariya Moeva: I hope it’s helpful. I hope it answers some questions and saves people some time. That’s what we are trying to do. Yeah, we are in the part of Google that has the ecosystem focus, so we know that ecosystem changes take longer. I mean, still it’s a fast growing plugin. It got to 5 million in 5 years, but still that’s 5 years. And in the context of software companies which move very fast, 5 years is a long time.

Yeah, we will keep going and hopefully more people can benefit from it. But we do have, yeah, still there are many people who come by and they’re like, whoa, what is this? Show me.

[00:33:36] Nathan Wrigley: Well, that’s nice. There’s room for growth as well.

[00:33:38] Mariya Moeva: Yeah, yeah. For sure. I mean, for sure there’s always, and more people create new sites. So, again, going back to that hosting provider question of like, can we bring it to them at the moment of creation so that they know this is something I can use?

[00:33:50] Nathan Wrigley: Yeah. So one more time, the URL is sitekit.withgoogle.com. I will place that into the show notes as well.

Mariya, I think that’s everything that I have to ask. Thank you so much for chatting to me about Site Kit.

[00:34:01] Mariya Moeva: Yeah, thank you for the invitation. It’s been a pleasure to talk about the ecosystem. And, yeah, if people have feature requests, they can always write us either on GitHub in the Site Kit repo, or on the support forum, or if they are coming to any WordCamp where we also are, we are also super happy to hear. So we always love to know what people struggle with, so that we can build it for them and make it easy.

[00:34:23] Nathan Wrigley: Thank you very much indeed.

On the podcast today we have Mariya Moeva.

Mariya has more than 15 years of experience in tech across search quality, developer advocacy, community building and outreach, and product management. Currently she’s the product lead for Site Kit, Google’s official WordPress plugin. She’s presented at WordCamp Europe in Basel this year, and joins us to talk about the journey from studying classical Japanese literature to fighting web spam at Google, and eventually shaping open source tools for the web.

Mariya talks about her passion for the open web and how years of direct feedback from site owners shaped the vision for Site Kit, making complex analytics accessible and actionable for everyone, from solo bloggers to agencies and hosting providers.

Site Kit has had impressive growth for a WordPress plugin. Currently there are 5 million active installs and a monthly user base of 700,000.

We learn how Site Kit bundles core Google products, like Search Console, Analytics, PageSpeed Insights, and AdSense, into a simpler, curated WordPress dashboard, giving actionable insights without the need to trawl through multiple complex interfaces.

Mariya explains how the plugin is intentionally beginner-friendly, with features like role-based dashboard sharing, integration with WordPress’ author and category systems, and some newer additions like Reader Revenue Manager to help site owners become more sustainable.

She shares Google’s motivations for investing so much in WordPress and the open web, and how her team is committed to active support, trying to respond rapidly on forums and listening closely to feedback.

We discuss Site Kit’s roadmap, from benchmarking and reporting features to smarter, more personalised recommendations in the future.

If you’ve ever felt overwhelmed by analytics dashboards, or are looking for ways to make data more practical and valuable inside WordPress, this episode is for you.